CRISIS COMPUTING: Multimodal Social Media Content for Improved Emergency Response

Motivation

Multimodal data shared on Social Media during critical emergencies often contain useful information about a scale of the events, victims and infrastructure damage. This data can provide local authorities and humanitarian organizations with a big picture understanding of the emergency. Moreover, it can be used to effectively and timely plan relief responses.

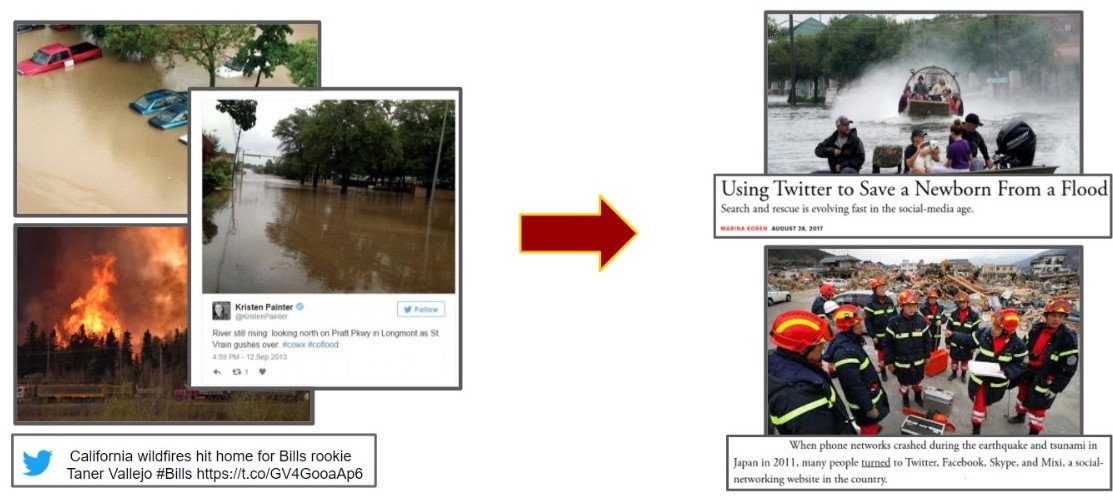

Challenge 1: One of the biggest challenges is handling social media information overload. To extract relevant information a computational system needs to process massive amounts of data and identify which data is INFORMATIVE in the context of disaster response.

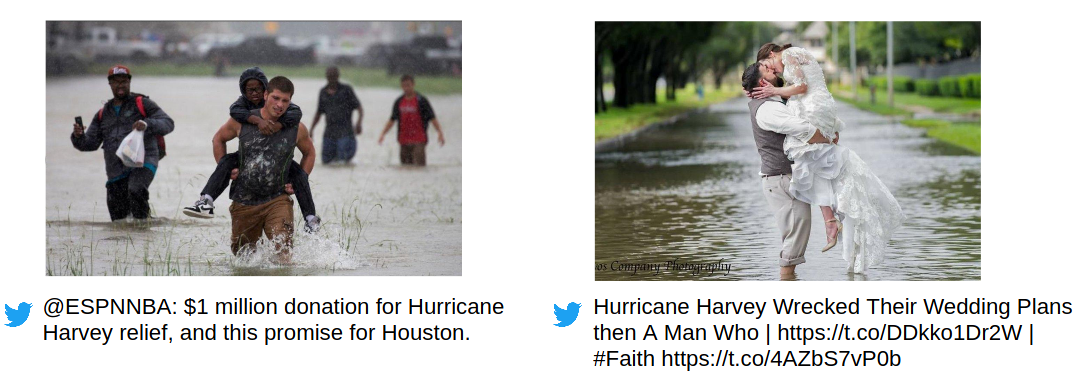

The somehow subtle differences in visual characteristics of 2 images from CrisisMMD Dataset. Both images were published on Twitter between 27th August 2017 and 3rd September 2017.

Challenge 2: Another issue in the field is that of data scarcity. To develop effective applications that could assist in crisis response, researchers need access to the large-scale annotated dataset.

In our work, we chose to explore current methodologies that can help alleviate these challenges. We decided to pursue the following 2-fold problem statement.

Problem Statement

- Explore different techniques of representation learning to improve performance on nuance classification of informative vs. non-informative social media posts in the domain of crisis computing.

- Investigate applications of unsupervised and semi-supervised learning methods to mitigate the issue of labeled data scarcity on the classification task.

Methods

REPRESENTATION LEARNING: CONTRASTIVE LEARNING

To improve classification accuracy on informative vs. non-informative classification task we decided to use supervised methods that produce meaningful, low-dimensional representations of the data.

SupCon Overview

For our images, we use a contrastive learning paradigm that is well-suited for embedding nuanced concepts.

We aim to learn useful representations of the two classes so that the downstream classification task becomes easy. Contrastive learning, in a nutshell, tries to pull clusters of points belonging to the same class close together in the embedding space, while simultaneously pushing apart the clusters of samples from different classes

There are many unsupervised methods for this type of problem but we chose to use the Supervised Contrastive Learning algorithm where we leveraged the labeled information due to the nature of our classification task. Once the model is trained, we use it to the created embeddings for downstream tasks, as well as, for a never-seen subset of the data, and check the accuracy of the model through kNN.

How SupCon works?

- Given an input batch of data, we first apply data augmentation twice to obtain two copies of the same batch. Both the copies are forward propagated through the encoder network to obtain a 2048-dimensional normalized embedding. The network learns about these transformations, what it means to come from the same image, how to spread data in embedding space, etc.

- During training, this representation is further propagated through a projection network which is discarded at inference time. The supervised contrastive loss is then computed on the outputs of the projection network

- Can use linear classifier or KNN to predict the classes of new examples. The contrastive loss maximizes the dot products of embeddings from similar classes and separates the positive samples from negatives using labels to make the distinction.

REPRESENTATION LEARNING: SENTENCE EMBEDDINGS

Fine-Tuned DistilBERT

To create sentence embeddings that perform well in the crisis computing context, we decided to fine-tune the DistilBERT model (from hugging face library) on our downstream task of tweets classification. The model is pre-trained on the GloVe Twitter 27 B embeddings. First, we preprocess the tweets: we remove stop words, URLs, hashtags, and punctuation.

Next, we use the DistilBERT tokenizer. We trained the model for 4 epochs with a batch size of 16. We use Adam optimizer with weight decay of 0.01 and a custom weighted loss function that compensates for the unbalanced dataset.

We extract sentence embeddings from the fine-tuned model by averaging all the final hidden layers of all the tokens in the sentences. The latent representations are obtained for the training data and test data that the model has not seen before. We use embeddings during our early fusion architecture.

SEMI-SUPERVISED LEARNING

FixMatch

To address the issue of data scarcity, we use semi-supervised learning in the form of consistency regularization and pseudo-labeling. Our goal is to provide a label to the unlabeled images in our dataset to obtain a large-scale annotated dataset for developing effective applications.

FixMatch uses both the approaches together to generate highly accurate labels, by following a two-step method:

- Creates a weakly-augmented version of the unlabeled image using basic augmentations like flip-and-shift, and produces a pseudo-label for it using the model’s predictions, which is retained only if it’s confidence is above a specific threshold.

- Feeds a strongly-augmented version of the same image to the model and trains it with pseudo-label as the target using cross-entropy loss.

FixMatch requires extensive GPU utilization and we aim to obtain higher accuracy with better GPUs available.

MULTIMODAL LEARNING

Information from a single source is adequate but wouldn’t it be better to get additional information from multiple sources? Exactly! There are multiple sources of data for a single problem at hand. These sources offer complementary information that not only helps to improve the performance of the model but also enables the model to learn better feature representations by utilizing the strengths of individual modalities.

For instance, visual information from images is very sparse, whereas a piece of textual information for the same is more expressive. Combining these two gives us enriched information about the scene at hand. We attempt to employ this intuition by exploring early and late fusion techniques to achieve robust performance.

The Power of Multimodal Data

[Image source: Olfi et al. 2020]

Experiments

MULTIMODAL LEARNING: Late Fusion

To handle the modalities of the dataset, we combine the representations of text and image by performing three late fusion techniques. We combine our best models: Bi-LSTM for textual data and ResNet-50 for the pictures, and compare the results with the best baselines from Gautam et al.

Our improved model can beat the baselines with a large margin in all three fusion techniques viz. Mean Probability Concatenation, Custom Decision Policy, and Logistic Regression Decision Policy.

-

We saw improved performances in all these techniques because of efficient base models i.e. ResNet-50 for image modality and Bi-LSTM for text modality with better accuracies than the baselines.

-

In the case of Custom Decision Policy, we implemented 2 fully connected layers with ReLU activation function in the first 128 dim layer and Sigmoid in the last layer. We trained the model for 30 epochs with Adam optimizer and BCE Loss to obtain a 0.08 training-loss.

From the above confusion matrices it is quite evident that our model has a higher AUCROC value since it can distinguish between the two classes effectively. The model identifies a large amount of true positives and true negativies thereby making it a robust for the task. The accuracy of these techniques can be further increased with better and deeper base architectures for text and image modalities.

MULTIMODAL LEARNING: Early Fusion

Next, we explore the early fusion paradigm where multimodal data representations are combined at the level of hidden layers in a deep learning architecture.

Our work is guided by recent publication by Olfi et al. who provides baseline results on CrisisMMD dataset using current deep learning architectures.

The authors proposed multimodal architecture that consists of VGG16 network and CNN RNN network. Each of the networks produces a 1x1000 representation of the given data modality. Once the vectors are merged, the shared representation is fed into one hidden layer and softmax layer.

In our early fusion architecture, we decided to combine our SupCon image embeddings of size 1x128 and fine-tunes DistilBERT sentence embeddings of size 1x 64.

We chose the image embeddings to be twice the size of the text embeddings.

Our results prove the importance of task-specific representations.

| Modality | Olfi et al. | Ours |

|---|---|---|

| Text | 0.808 | 0.84 |

| Image | 0.833 | 0.89 |

| Text + Image | 0.844 | 0.91 |

Label Data Scarcity

To alleviate the issue of data scarcity we orginally planned to use FixMatch for images and DeCLUTR for text. However, the tweets are too short to fine-tune DeCLUTR. We successfully trained FixMatch but the process was very long and very computationally expensive. Taking from the success of our embeddings, we decide to use our trained encoders to obtain representations for the images and textual tweets in the test set and then use kNN for the classification purposes. We planned to retrain our architectures on augmented training set (adding pseudo-labeled) data and examine their infulence on the models performance. Due to the time constraints we were not able to complete that task.

| Model | Modality | Accuracy |

|---|---|---|

| kNN with SupCon | Image | 75.9% |

| DistilBERT | Text | 73.4% |

| FixMatch | Image | 72.9% |

Embeddings Visualization via t-SNE

We visually examine embeddings produced by our models by applying t-sne algorithm.

The above scatter plot depicts one of the best results we have obtained during the training of our Supervised Contrastive model. We can see that the model has done a great job at segregating the informative and non-informative images. However, there have been some scenarios where our network fails to classify the images. We noticed that this issue arises as a consequence of a large subset of pictures that depict statistical data about the disaster in the form of graphs, pie charts, and more. For example, we observe that all the images with statistical data in the training set belong to the "informative" class. The pictures in the CrisisMDD dataset showed a bias towards line graph pictures - most of the images of the line graph belong to the "informative" class. As a result, our model had a lot of false positives when tested on unseen data e.g. random images downloaded from google.com that contain line plot graphs. In our future work, we should modify our model to understand the graph images through text extraction.

Future Directions

With the increasingly noticeable impact of global warming on our climate, the disaster impact analysis and estimation became a critical issue. We hope to contribute to the field and help mitigate the lack of situational awareness by helping to develop a computational system for automated disaster summaries creation.

Specifically:

- We aim to include more labels like disaster threat levels, type of emergencies, etc., to encompass a detailed report of incidents.

- Apart from text and images we plan to include different modalities to further improve our existing models.

- With access to more computing time ( better/more GPUs), we wish to train the contrastive models for more epochs and achieve an accuracy as high as 90%, thereby improving the feature representations.

- We also intend to improve label propagation techniques and automatically generate a coherent visual-text summary report about an emergency event/disaster and develop a metric to evaluate the quality of such a report.